Using AI to learn faster

How can we use these new developments to learn better, faster?

I spent 30 minutes learning about synthetic proteins and protein folding. What follows may or may not be entirely accurate. Take it with a heap of skepticism.

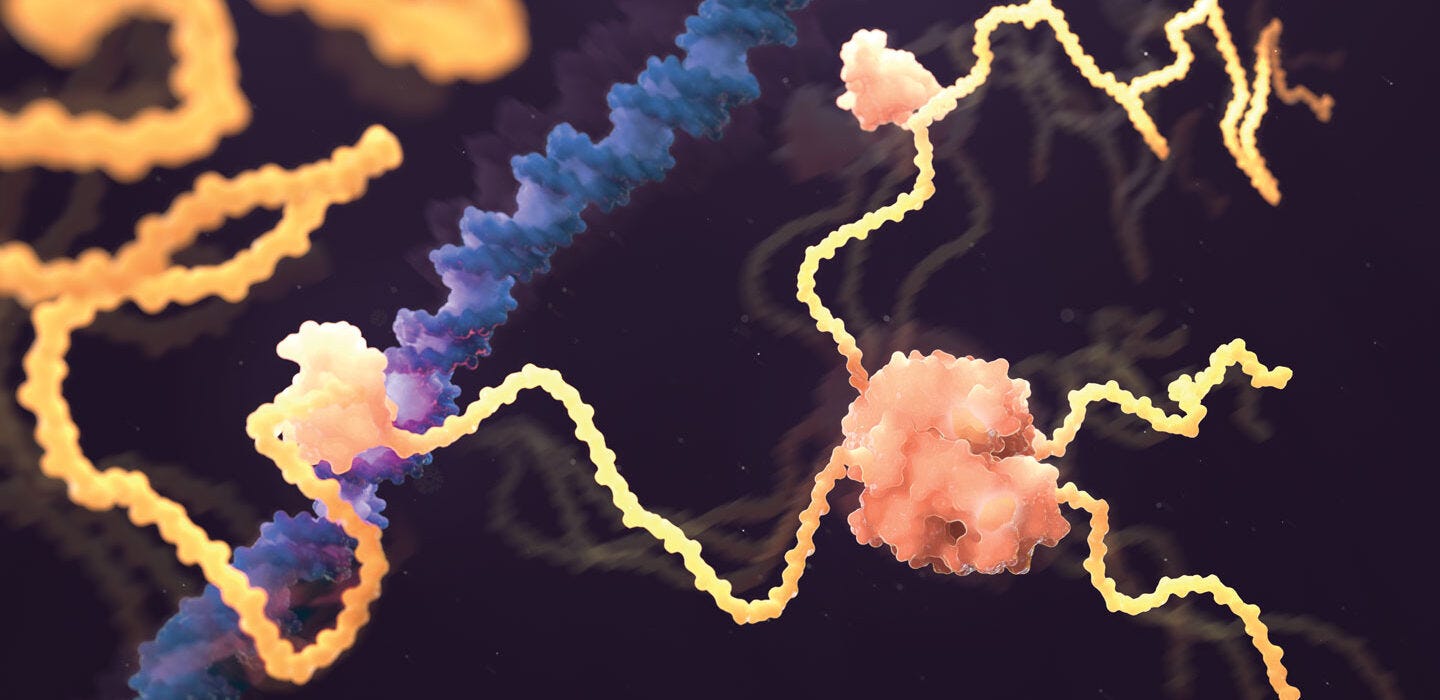

One of life’s mysteries is how proteins get their shape.

If we can figure this out, we can create better drugs and even more efficient fuels. To do that, we need to be able to work out the shape a specific protein will take. This is the protein folding problem.

Nearly everything in our body is built from, or run by, proteins. There's a reason they're called the building blocks of life.

Proteins combine with other proteins in the body to form protein complexes, which makes their shape extremely important. Think of a jigsaw puzzle where one tab is even the slightest bit different: the piece no longer fits. In the same way, a protein that is off by even a single amino acid can fail to do its job.

A protein's shape is determined by the properties of the specific amino acids it is made of, such as the bonds they form and the polarity of their side chains.
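To make the polarity point a little more concrete, here is a minimal sketch in Python. It is my own illustration, not part of the original 30 minutes of learning. It uses the Kyte-Doolittle hydropathy scale, a standard table that scores each amino acid from water-loving (negative) to water-avoiding (positive). The cutoff at zero, the function names, and the example sequence are assumptions made for illustration only. Water-avoiding residues tend to end up buried inside a folded protein, which is one of the forces shaping it.

```python
# Kyte-Doolittle hydropathy values, keyed by one-letter amino acid code.
# Positive = hydrophobic (water-avoiding), negative = hydrophilic.
KYTE_DOOLITTLE = {
    "I": 4.5, "V": 4.2, "L": 3.8, "F": 2.8, "C": 2.5,
    "M": 1.9, "A": 1.8, "G": -0.4, "T": -0.7, "S": -0.8,
    "W": -0.9, "Y": -1.3, "P": -1.6, "H": -3.2, "E": -3.5,
    "Q": -3.5, "D": -3.5, "N": -3.5, "K": -3.9, "R": -4.5,
}


def label_residues(sequence: str) -> str:
    """Label each residue 'H' (hydrophobic) or 'P' (polar).

    The cutoff at 0 is an illustrative assumption, not a biological rule.
    """
    return "".join(
        "H" if KYTE_DOOLITTLE[aa] > 0 else "P" for aa in sequence.upper()
    )


def mean_hydropathy(sequence: str) -> float:
    """Average hydropathy of a sequence (higher = more hydrophobic overall)."""
    values = [KYTE_DOOLITTLE[aa] for aa in sequence.upper()]
    return sum(values) / len(values)


if __name__ == "__main__":
    example = "MKTAYIAKQR"  # hypothetical fragment, chosen only for illustration
    print(example)
    print(label_residues(example))
    print(round(mean_hydropathy(example), 2))
```

This only labels residues one at a time. It says nothing about the 3D shape itself, which depends on how all of these residues interact at once.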

So, can't we just look at a protein to determine its shape? We sequenced the whole genome, right?

A protein's shape is very hard to predict.

DNA is used as instructions to assemble amino acids into proteins. As this happens, proteins begin to fold into complex 3D structures that are nearly impossible to predict. There are more potential configurations of a single protein than there are atoms in the universe.
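The "more configurations than atoms" claim can be sanity-checked with a back-of-the-envelope estimate in the style of Levinthal's paradox. The sketch below is mine and rests on loud assumptions: a 100-residue protein, two rotatable backbone angles per peptide bond, three stable states per angle, and roughly 10^80 atoms in the observable universe. None of these numbers come from the sources I used, and real proteins are messier.

```python
import math

# Illustrative assumptions (not measured values):
RESIDUES = 100                 # length of a smallish protein
ANGLES_PER_BOND = 2            # the phi and psi backbone angles
STATES_PER_ANGLE = 3           # stable positions each angle can take
LOG10_ATOMS_IN_UNIVERSE = 80   # common order-of-magnitude estimate


def log10_conformations(residues: int,
                        states_per_angle: int = STATES_PER_ANGLE) -> float:
    """Return log10 of the number of possible backbone conformations.

    Working in log10 keeps the numbers readable; the raw count is
    states_per_angle ** (number of free angles).
    """
    peptide_bonds = residues - 1
    free_angles = peptide_bonds * ANGLES_PER_BOND
    return free_angles * math.log10(states_per_angle)


if __name__ == "__main__":
    log_count = log10_conformations(RESIDUES)
    print(f"roughly 10^{log_count:.0f} conformations")
    print("more than atoms in the universe:",
          log_count > LOG10_ATOMS_IN_UNIVERSE)
```

Under these assumptions the count comes out well above the atom estimate, which is why trying every configuration one by one is not a realistic way to find a protein's shape.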

Traditionally, we used NMR spectroscopy or X-ray crystallography to determine protein structures experimentally, but these methods can take weeks or months and require expensive equipment.

AlphaFold2, a neural network DeepMind presented in 2020, was its answer to this. Using a two-part process, it was able to accurately (relatively speaking) predict the shape of proteins. Instead of simply using a larger NN, it chains two stages together: the first (the Evoformer) compares the protein's sequence against related sequences to work out which amino acids are likely to sit near each other, and the second (the structure module) assembles that information into a 3D shape.

Our goal right now is to recreate standard proteins so we can begin to explore new, synthetic ones.

If I had more time, I would next go deeper into how AlphaFold2 works and how the different properties (hydrogen bonds, hydrophobic interactions, electrostatic interactions) impact the shape.

How can you learn things faster?

This weekend students did their best to go technically deep on a new topic in just 40 minutes. It's not easy. We gave them some recommended resources and away they went.

Google Scholar

Google Patents

YouTube

ChatGPT

In the same spirit, I gave myself a slightly shorter timeline and attempted it. For my own learning, I began with a couple of YouTube videos on protein folding and then moved into ChatGPT to begin asking it some questions I had on the topic. From there I found a recent paper and started reviewing it on Explainpaper before running out of time.

Thirty minutes isn't much, but it's enough to gain some understanding. Where I found students went wrong was diving right into the complexity (papers, patents) without first understanding the high-level concepts. Reading a paper about exosomes as new biomarkers in liquid biopsies won't be useful if you don't actually understand the procedure's purpose.

I find YouTube to be the best starting point for a quick bird's-eye view of a topic, often supported with visuals to help with understanding. From there, questions will start to come up that you can dive deeper into.

New AI tools like Explainpaper and Elicit pair extremely well with Google Scholar: you can find recent papers on a topic and use AI to explain some of their concepts or language. You can even interrogate a paper to improve your learning.

From there, we want to reinforce the knowledge. The best way to do this is to attempt to teach others.¹

Reinforcing Knowledge with the Feynman Technique

The best place to start is the Feynman Technique, named after physicist Richard Feynman.

Write out everything you know on the topic as if you're teaching someone. It helps to say it out loud at the same time, as if you’re explaining it to a group. Take note of the areas where you stumble or are unclear. This is a sign you don't really understand it yet, and it points to where you need to learn more.

Go back to learning. Fill in your gaps.

Try it again.

If you repeat this process, filling in the holes in your knowledge, you will have a stronger grasp of the topic and be able to explain it clearly and simply, a mark of true understanding.

The second trick I really like is to have a conversation with someone curious who knows nothing about the topic and teach them what you learned. Encourage them to ask you questions. Questions are where you really get tested, because they take you off script and show you where your gaps are.

These techniques show us why writing is such a powerful learning tool. Not taking notes, but synthesizing your knowledge into something that explains the concept.

Writing is actually for your benefit, not others'.

Write more; learn better.

Getting out of the lab

When we are working on something new, we tend to avoid getting feedback because deep down, we're scared of being told we've been wasting time on the wrong path. This won't surprise you if you've heard of the sunk cost fallacy. It can be hard to give up all that effort. But instead of putting it off, we must face our fears as early as possible.

The faster we find out where we're wrong, the less time we waste.

Astro Teller, head of X, Alphabet's moonshot factory (formerly Google X), said we should ask ourselves, "What will help me learn the fastest about this?" By focusing on learning instead of being right, we'll move faster and learn from real-world feedback. At X they say, “make contact with the real world early”.

Real-life experiences beat out fancy plans every time. We can come up with a hundred ideas and do tons of research, but until we show our ideas to customers, we won't know if they're any good. The real test is getting honest feedback and seeing how things work in the real world.

We have to learn to rip off the Band-Aid and embrace that feedback, because all learning is good learning.

What are you avoiding feedback on?

✌🏼

¹ Exactly why I wrote the protein folding section.

AI, as you've come to realize, provides a new opportunity for a better and different online learning experience. I'm working on this, inspired by Stephen Wolfram's blog post in which he makes the argument for combining ChatGPT's natural language, knowledge and intelligence with the Wolfram Language's computational capabilities.

The first subject I worked on is history... biology to follow next.

Check it out at https://store.warmersun.com/l/AILC_History